Continuous Integration (CI) and Continuous Delivery (CD)

DevOps is quite literally the unification of the legacy development and operations silos, both in the formation of the word and in its meaning. That unification breeds improved collaboration and a shared focus on the delivery process, which in turn drives quality, innovation, and agility. The synergies radiate beyond the delivery process itself. For instance, in an automated CI/CD environment developers can iterate through changes more quickly and with less cognitive load. The concerns of tracking outdated or security-compromised dependencies, and of working out how a change might negatively impact the larger code base, are mostly shifted to automation.

Continuous Integration (CI) and Continuous Delivery (CD) are inextricably linked to DevOps. In simple terms, DevOps is the construct and CI/CD is its manifestation. In fact, the effectiveness of DevOps is measured by the four DevOps Research and Assessment (DORA) metrics, all of which are intimately tied to CI/CD. DORA’s Deployment Frequency, Mean Lead Time for Changes, Change Failure Rate, and even Mean Time to Recover are principally measurements of CI/CD effectiveness. It should be noted that the DevOps domain continues to widen over time, and many also consider Microservices, Infrastructure/Configuration/Policy as Code, and Observability to be key DevOps tenets. However, the Sun in the DevOps Solar System remains CI/CD.
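
To make the four DORA metrics concrete, here is a minimal Python sketch of how they might be derived from a log of production deployments. The `Deployment` record, its field names, and the choice of hours and deployments-per-day as units are illustrative assumptions, not a standard schema; real tooling typically pulls this data from the VCS and the deployment system.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean
from typing import Optional


@dataclass
class Deployment:
    """One production deployment (hypothetical record shape)."""
    committed_at: datetime                  # when the change was committed
    deployed_at: datetime                   # when it reached production
    failed: bool = False                    # did it cause a production failure?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def _hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def dora_metrics(deployments: list[Deployment], period_days: int) -> dict[str, float]:
    """Compute the four DORA metrics over one reporting period."""
    if not deployments:
        raise ValueError("need at least one deployment")
    failures = [d for d in deployments if d.failed]
    lead_times = [_hours(d.deployed_at - d.committed_at) for d in deployments]
    recoveries = [
        _hours(d.restored_at - d.deployed_at)
        for d in failures
        if d.restored_at is not None
    ]
    return {
        "deployments_per_day": len(deployments) / period_days,
        "mean_lead_time_hours": mean(lead_times),
        "change_failure_rate": len(failures) / len(deployments),
        # Reported as 0.0 when nothing failed (or nothing has been restored yet).
        "mean_time_to_recover_hours": mean(recoveries) if recoveries else 0.0,
    }
```

Every input to the calculation (commit time, deployment time, failure, restoration) is an event the CI/CD system already records, which is why these metrics are so closely tied to it.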

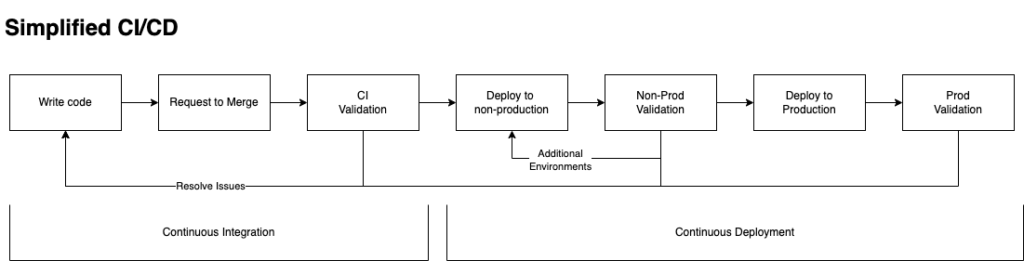

The above figure provides a high-level overview of Continuous Integration and Continuous Delivery. The flow of this process is broken down into the following steps:

- Write Code. This typically takes the form of implementing a feature in a dedicated feature branch housed in a source code repository.

- Request to Merge. Once the developer feels they have completed the feature implementation, they make a request to merge their changes, typically into the master branch. This request is referred to as a pull request in GitHub or a merge request in GitLab.

- CI Validation. Before code is allowed to be merged it is validated. The process should include the automated [typically unit] testing of the entire merged codebase, automated [typically vulnerability and code quality] scanning of the merged code and a code review by someone other than the requestor. If any of those are unsuccessful, the code is not merged.

- Deploy to non-production. The first step in continuous deployment is to deploy to a non-production environment. This provides a mechanism to see how the newly updated code runs.

- Non-production validation. The validation of non-production environment(s) typically includes a mix of integration, functional end-to-end, chaos, performance and security tests. There may be several environments, deployed to serially and/or in parallel, to test different data and configuration conditions. It should be noted that the term Continuous Deployment represents an implementation of Continuous Delivery that is completely automated with no user intervention. This is in contrast to scenarios that require a supervisor’s approval before further deployment(s).

- Deploy to Production. This is the culminating step in our CI/CD process, where code is deployed to production.

- Production Validation. It is important to understand that we don’t deploy and forget. There is a step after production deployment. Any number of issues may arise with the deployment that require rolling back to a previous version or rolling forward to a new one. The deployment of code/data/configuration could be incomplete, smoke or synthetic tests could alert to an error condition, performance could be degraded, escaped defects could be discovered, zero-day vulnerabilities could be discovered, as well as a host of other issues.

You will notice there are several validation steps described above. These validations are frequently referred to as quality gates within the CI/CD process. Ideally, all your quality gates provide comprehensive validation so that they may be automated. Manual quality gates are a stop-gap for processes that haven’t been automated for one reason or another. An example is a code review on a pull request. Even though the tooling is getting better at providing guidance, a human may be preferred to provide a more nuanced review that is not yet fully possible with automation.
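
The quality gates described above can be sketched as a chain of checks in which the first failure stops the change from moving forward. This is a minimal Python illustration, not any particular CI product’s API: the gate functions, the dictionary keys on `change`, and the log format are all hypothetical.

```python
from typing import Callable, NamedTuple


class GateResult(NamedTuple):
    passed: bool
    name: str


# A gate is any callable that inspects a change and reports pass or fail.
Gate = Callable[[dict], GateResult]


def unit_tests(change: dict) -> GateResult:
    return GateResult(change.get("tests_failed", 0) == 0, "unit tests")


def vulnerability_scan(change: dict) -> GateResult:
    return GateResult(not change.get("vulnerabilities"), "vulnerability scan")


def code_review(change: dict) -> GateResult:
    # Manual gate: must be approved by someone other than the requestor.
    approver = change.get("approved_by")
    ok = approver is not None and approver != change.get("author")
    return GateResult(ok, "code review")


def run_gates(change: dict, gates: list[Gate]) -> tuple[bool, list[str]]:
    """Run gates in order and stop at the first failure."""
    log: list[str] = []
    for gate in gates:
        result = gate(change)
        log.append(f"{result.name}: {'pass' if result.passed else 'FAIL'}")
        if not result.passed:
            return False, log
    return True, log
```

Because automated and manual gates share one interface here, replacing a manual gate with an automated one later only means swapping one callable for another.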

But, before we delve into Continuous Integration and Continuous Deployment it is important to understand their benefits.

Continuous Integration provides early detection of integration issues through frequent integration and testing of code changes. In turn, this provides faster feedback on changes, which makes resolving any issues more efficient. Rapid feedback minimizes context switching and keeps issues from widening, because course is reversed sooner. This brings increased confidence in the code base, less time spent contemplating and researching how changes might negatively impact it, and a consequent increase in velocity as focus shifts to the changes themselves.

Continuous Deployment reduces the risk of human error in the deployment process, improves the speed of delivery, reduces the lead time between committing a change and that change being available in production, improves the ability to quickly roll back changes, improves quality through automated testing, and enables fast experimentation as well as iteration.

The central goals running through both of these processes are speed and quality. As we delve further, you will see those tenets guide the construction and continued evolution of a CI/CD system at every turn, including branching strategy, tool configuration, and testing strategy.

Continuous Integration (CI)

Continuous Integration is the practice of frequently merging code into a central source code repository. Each merge is then automatically verified to quickly identify issues. Innovation is optimized when issues are resolved as early as possible. This avoids wasting time compounding an issue and then backing out or refactoring an amalgamation of changes.

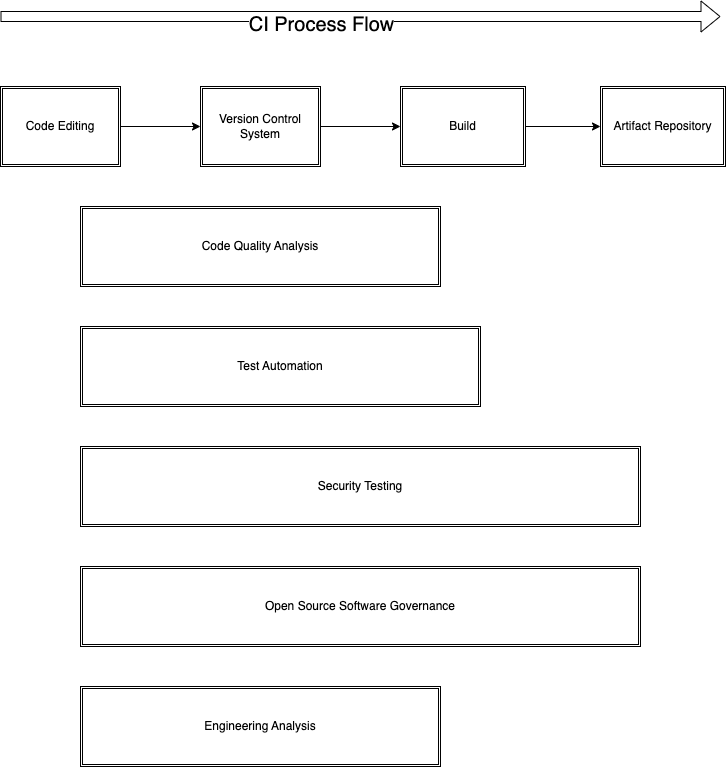

The main components of continuous integration include:

- Version Control System (VCS). The VCS is used to manage and track code changes.

- Code Quality Analysis. These tools check the quality of the code and identify potential issues. Although the code may run, it may exhibit well-known performance and maintainability problems. Examples include unused code (dead code), duplicated code, long method implementations, and ineffectual [or missing] comments. Vendors have integrated this functionality into code editors, into CI orchestration engines that pivot around the VCS, and into the build itself.

- Build Automation. This is used to compile, build and package code from your Version Control System.

- Test Automation. This is used to automatically test the code. Typical tests revolve around unit testing and in some cases light-weight integration tests. With that said, test automation is also an important component within continuous delivery. More sophisticated testing such as integration, end-to-end and performance testing is best suited to the continuous deployment stage. Additionally, you should endeavor to minimize build times, so the team should be conscious of where each test is best run and how those tests are implemented. Traditionally, unit and other forms of CI testing were integrated into the build. There continues to be momentum to push left, integrating tests into the code editing environment so that issues are flagged and resolved sooner.

- Security Analysis. These tools scan for vulnerabilities in your code base, its dependencies, and the packages generated from your build process. Typical categories of CI security tools include static application security testing, software composition analysis and container security. As with test automation, these tools were traditionally integrated into the build. As they have evolved to rapidly counter increasing security threats, their integration has extended across the entire CI and CD processes.

- Artifact Repository. This stores the build artifact(s) from the continuous integration process. In turn, the continuous deployment process retrieves these artifacts to deploy them to the appropriate targets. Many implementations of CI/CD make the mistake of being tightly coupled and lack a formal artifact repository. I can’t stress enough the importance of a loosely coupled system between CI and CD. In tightly coupled cases, once the package is built it is handed off directly to the CD implementation to deploy. The advantage of a loosely coupled system that stores its artifacts in a repository is that the repository provides an established access point to monitor, query and control. For instance, security scans may be run periodically on the artifact repository to alert on packages with newly discovered vulnerabilities. Once issues are discovered, the repository may be configured to act as a firewall that disallows downloads to connected CD processes. If your CI/CD is tightly coupled, software composition vulnerabilities have to be scanned for on the production servers themselves, which is inefficient, or they remain hidden on serverless platforms because of limitations in your tooling. When multiple applications deploy to these platforms, it then becomes difficult to quickly identify the source software product and unwind those vulnerabilities. In contrast, if your security tooling regularly scans your artifact repository, you can catch newly identified vulnerabilities and quickly identify the source team and product so they can mitigate immediately.
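
The role of the artifact repository as a control point between CI and CD can be sketched in a few lines of Python. This is an in-memory illustration under stated assumptions: the `Artifact` fields, the `name==version` dependency strings, and the `rescan` and `download` method names are hypothetical and do not correspond to any specific repository product.

```python
from dataclasses import dataclass


@dataclass
class Artifact:
    """A build artifact published by CI (hypothetical record shape)."""
    name: str
    version: str
    owner_team: str
    dependencies: frozenset      # pinned dependencies, e.g. {"libfoo==1.2.0"}
    quarantined: bool = False


class ArtifactRepository:
    """In-memory stand-in for a repository sitting between CI and CD."""

    def __init__(self) -> None:
        self._artifacts: dict[tuple[str, str], Artifact] = {}

    def publish(self, artifact: Artifact) -> None:
        # CI side: store the build output under (name, version).
        self._artifacts[(artifact.name, artifact.version)] = artifact

    def rescan(self, vulnerable: set[str]) -> list[Artifact]:
        """Periodic scan: quarantine artifacts built on newly flagged dependencies."""
        flagged = []
        for artifact in self._artifacts.values():
            if artifact.dependencies & vulnerable:
                artifact.quarantined = True
                flagged.append(artifact)
        return flagged

    def download(self, name: str, version: str) -> Artifact:
        # CD side: the repository acts as a firewall for quarantined artifacts.
        artifact = self._artifacts[(name, version)]
        if artifact.quarantined:
            raise PermissionError(f"{name} {version} is quarantined")
        return artifact
```

Each flagged artifact carries its `owner_team`, so a periodic `rescan` both blocks further deployments and identifies who needs to mitigate.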

Not surprisingly, there are products that address individual components, multiple components, the complete CI orchestration, the complete CI/CD orchestration and every variation you can think of. Vendors have done a great job allowing integration with one another. The good news is that this allows for virtually unlimited customization for your process. The caveat is that some may consider the number of choices overwhelming. My point with the above list and more widely throughout this book is to provide a checklist of components to guide your journey to better software.

As previously mentioned, the list above highlights the main components of a CI system. There are additional considerations teams include in their CI process that both overlap and skirt some of the aforementioned component definitions.

- Open Source Software Governance. Open source software governance provides direction and control over your organization’s use of open source software. There are licensing, security, and legal aspects to consider when leveraging open source software. From a licensing standpoint, your applications must align with the licensing requirements of the open source software they use. For instance, the GNU General Public License requires that a distributed work which builds on GPL-licensed code be released under the same license, with its source code made available just like the original project. From a security standpoint, all software is susceptible to vulnerabilities. Tooling must be in place to identify and respond to vulnerabilities in the open source software teams have implemented. Additionally, there may be government and/or contractual obligations that limit the use of open source software in your organization’s applications.

- Engineering Analysis. These tools integrate with the source code version control system to analyze engineering effectiveness. They are not meant to be a quality gate. They are meant to help teams understand and improve how they develop software. One example use is to highlight work inefficiencies such as patterns of exceedingly long delays in approving merges, the bottleneck and risk of only one person maintaining a critical part of the code, and extensive rework that points to unclear requirements or to inexperience that calls for upskilling.
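
The licensing side of open source governance described above lends itself to a simple automated check. The sketch below assumes each dependency’s license is already known as an SPDX identifier; the allow-list contents and the dependency names used to exercise it are purely illustrative, and a real policy would come from your legal and compliance teams.

```python
# Hypothetical organizational policy: SPDX license identifiers approved for use.
ALLOWED_LICENSES = frozenset({"MIT", "Apache-2.0", "BSD-3-Clause"})


def license_violations(
    dependencies: dict[str, str],
    allowed: frozenset = ALLOWED_LICENSES,
) -> list[str]:
    """Return the dependencies whose declared license falls outside the policy."""
    return sorted(
        f"{name} ({license_id})"
        for name, license_id in dependencies.items()
        if license_id not in allowed
    )
```

A build could fail when this list is non-empty, or merely report it, depending on whether the team treats licensing as a quality gate.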

Figure 2 shows the process flow of the aforementioned 8 components, plus a code editing component in the top left corner. Traditionally, the CI process started with the integration of a version control system with build automation. In an effort to find issues faster, and consequently resolve them faster, tooling, testing and analysis have been extended (pushed) to the left in our CI processes. In addition to being pushed left, security testing and open source governance have been extended to the right as a means to constantly re-evaluate what was already built [and potentially deployed] for newly discovered threats.

Depending on your organization’s needs and tooling choices, the exact availability, breadth and flow placement of the various governance components will differ. One of the key takeaways is to be aware of, and opportunistically introduce, actionable governance earlier as a means to speed innovation. Shift left. The other key takeaway is that those same needs and tooling will influence the placement of your quality gates.

Especially to someone new to the subject, the talk of varied placement of governance checks can be a bit confusing, so the above discussion bears reiteration. Traditionally, most of the quality checks were integrated into the build process. As tools and processes have matured, vendors are providing governance capabilities prior to the build. One example is GitHub Enterprise, which provides security feedback during the code editing process and prior to a formal CI build. Again, the benefit of this is to be alerted to issues, and to resolve them, faster.

As these tools mature, organizations are presented with options to enforce various types of governance during the build and/or during code editing. In addition to providing faster feedback, the other benefit of extricating a gate from the build process and moving it into the editing process is a reduction of build times. This is advantageous when build times are substantial and teams are looking for ways to optimize them. Realizing there will always be edge cases, I generally recommend that governance still be integrated into the build process even when tooling provides for a shift left. Problems can still be found earlier, but a comprehensive analysis should be integrated into the build, and the shifting left should reduce the number of issues detected during the build.

In cases where governance is adding substantial delays to the build, there are alternatives to solely shifting left and embedding it into the code editing process. One such alternative is to schedule periodic builds that include the governance checks. On-demand builds that need to be streamlined may opt to skip governance checks if those checks passed in the last periodic build. This does bring a risk of missing something that was recently added to the code base. In cases where the build needs to be optimized, teams may wish to customize this asynchronous approach per governance component to suit their needs.
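
The asynchronous approach above boils down to a small decision made at the start of each build. Here is a minimal Python sketch of that decision; the `PeriodicResult` record, the `"scheduled"` and `"on-demand"` trigger names, and the 24-hour freshness window are assumptions chosen for illustration and would be tuned per governance component.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class PeriodicResult:
    """Outcome of the most recent scheduled build (hypothetical record shape)."""
    finished_at: datetime
    governance_passed: bool


def should_run_governance(
    trigger: str,
    last_periodic: Optional[PeriodicResult],
    now: datetime,
    max_age: timedelta = timedelta(hours=24),
) -> bool:
    """Decide whether this build must include the governance checks."""
    if trigger == "scheduled":
        return True                     # periodic builds always run the checks
    if last_periodic is None or not last_periodic.governance_passed:
        return True                     # no clean baseline to rely on
    # On-demand build: skip only while the last clean result is still fresh.
    return now - last_periodic.finished_at > max_age
```

The shorter the `max_age` window, the smaller the risk of missing a recently introduced issue, at the cost of more on-demand builds paying for the full checks.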

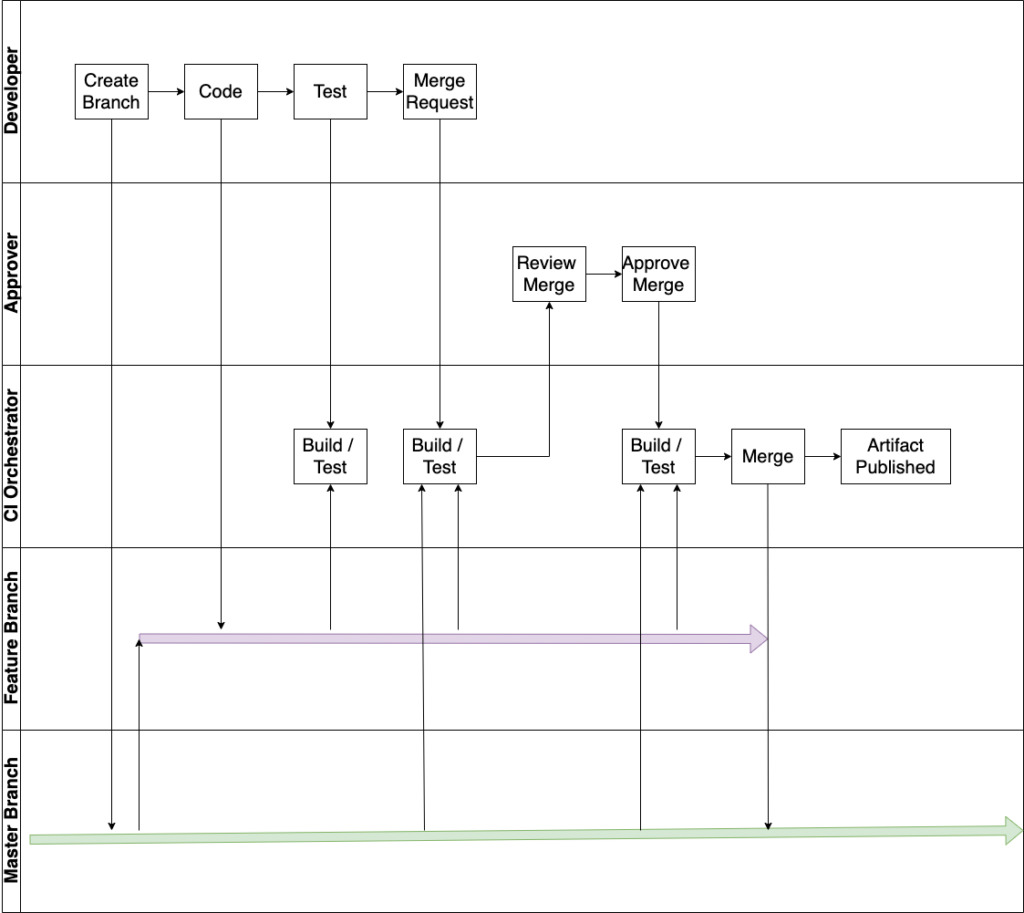

Drilling down, let’s walk through Figure 3, which is a more specific example of how a code change makes its way through the CI process. This example employs GitHub flow, which is the most advantageous branching strategy for CI/CD. Again, the primary goals of CI/CD are speed and quality. GitHub flow is focused on integrating with master frequently and rapidly. The master branch can then be rapidly deployed all the way to production. Other flows that employ cherry-picking of features and/or dedicated release branches are intermittently slowed down by elongated merge processes. This increases the likelihood of finding issues later, along with the associated increase in work to resolve them.

The major steps of Figure 3 are summarized below.

- Developer -> Create Branch. The developer creates a branch from master that serves as the base on which they will add a new feature.

- Developer -> Code. The developer adds code to the feature branch to implement the new feature.

- Developer -> Test. The developer tests their code before attempting to add it to the main code base. The build should perform a mix of unit tests, code analysis and security analysis. In addition, the developer should deploy this branch to a development server to provide some measure of runtime testing. Typically, a CD process will be integrated into a selected feature branch to facilitate this runtime testing. Presuming there are no errors or issues, the developer will proceed to request a merge.

- Developer -> Merge Request. After ensuring that the new feature works, the developer will request to merge their code with the code base. In this example, a human approver will decide whether to perform the merge after reviewing the changes. Everyone’s time is valuable. The proposed merge is built and tested first so that the approver doesn’t waste time reviewing issue-plagued changes. Following the successful build and test, the approver is prompted to review.

- Approver -> Review Merge. The approver reviews the code changes and any issues highlighted in the build tests.

- Approver -> Approve Merge. If the approver is satisfied with the changes, they initiate a merge. The proposed merge is built and tested once again. The reason for this is that other merges made between when the developer submitted their merge request and when it was approved may result in a new conflict. The need for this repeated check underscores the potential consequences of delays in reviewing merge requests. That is why many Engineering Analysis tools track the average time to approve a merge request. If the proposed merge is found to have no issues, the merge is completed and then a build artifact is published.
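
The two validation points in the steps above, once at submission and once again at approval, can be sketched as follows. This is a simplified Python model rather than any VCS’s actual API: `build_and_test` is a hypothetical hook that builds and tests the branch merged with a given master commit, and the status strings are illustrative.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical build hook: builds and tests `branch` merged with `master_head`,
# returning True only if everything passes.
BuildAndTest = Callable[[str, str], bool]


@dataclass
class MergeRequest:
    branch: str
    status: str = "open"


def submit(mr: MergeRequest, master_head: str, build_and_test: BuildAndTest) -> None:
    """First validation: only clean merges are sent on to the approver."""
    ok = build_and_test(mr.branch, master_head)
    mr.status = "awaiting review" if ok else "build failed"


def approve(mr: MergeRequest, master_head: str, build_and_test: BuildAndTest) -> None:
    """Second validation: master may have moved while the request awaited review."""
    if mr.status != "awaiting review":
        raise ValueError(f"cannot approve a request in state {mr.status!r}")
    ok = build_and_test(mr.branch, master_head)
    mr.status = "merged" if ok else "build failed"
```

Passing the current `master_head` into both calls is the point of the sketch: the longer a request waits between `submit` and `approve`, the more likely the two calls see different commits and the second build fails.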

One of the key tenets of CI is merging regularly. As teams grow in size, code changes tend to have an increasing impact on others. When teams practice infrequent check-ins, there is increased difficulty with merge conflicts and an increased tendency to break code. Merging frequently helps team members realize the impact of their changes faster. It avoids having to back out a breaking change along with everything that was coded after it. Realizing every situation is different, I generally recommend team members merge their code at least once per day.

Teams should endeavor to only add “healthy” code to their code base. Your CI process should be designed with quality gate(s) to avoid the addition of unhealthy code. Extending that analogy, injecting unhealthy code into a code base will slowly infect it. Before you know it, your code base will have technical debt syndrome. Your team’s mindset should be akin to the Hippocratic Oath’s “do no harm”.

Although there is a burgeoning industry of tools that help automate the build process, many teams still rely on peers (humans) to review a merge. The reviewer(s) of the merge should not be a speed bump. They should spend time reviewing the tooling analysis and thinking about how these changes will impact the code. They are a part of the “quality gate” that avoids the addition of unhealthy code such as temporary fixes, code that breaks other parts of the code base, or code that is difficult to maintain.

Code submitters and reviewers should be mindful of the purpose of this process and considerate of each other’s time. Simply put, they should ensure their interactions don’t waste the other’s time. For example, providing a reviewer with clearly written code along with a summary of your changes will reduce the time spent reviewing them.

Conversely, delays in reviewing code after it was submitted will negatively impact the submitter. As time goes by and more team members check in code, there will be a greater chance of merge conflicts that have to be resolved. Additionally, because context switching can be time-consuming, the submitter may stop working while waiting for feedback. If feedback is not timely and edits are requested, the submitter will most likely have moved on to another task and will have to context switch back. Depending on the length of the delay, the submitter may have forgotten a large portion of the code’s details and may need to spend substantial time reinterpreting their old code. Just as submitters should be mindful of what they are submitting, reviewers should be mindful of communicating required improvements constructively and precisely.
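
Review delay is easy to measure once the request and approval times are recorded. The sketch below computes the average time to approve, the figure Engineering Analysis tools commonly track, and lists the requests that waited longer than a chosen threshold. The input shape, the request identifiers, and the threshold are illustrative assumptions.

```python
from datetime import datetime, timedelta
from statistics import mean


def hours_to_approve(requested_at: datetime, approved_at: datetime) -> float:
    return (approved_at - requested_at).total_seconds() / 3600


def review_report(
    requests: dict[str, tuple[datetime, datetime]],
    slow_after_hours: float,
) -> tuple[float, list[str]]:
    """Return (mean hours to approve, ids of requests slower than the threshold).

    `requests` maps a merge request id to its (requested_at, approved_at) times
    and must not be empty.
    """
    waits = {mr_id: hours_to_approve(*times) for mr_id, times in requests.items()}
    slow = sorted(mr_id for mr_id, hours in waits.items() if hours > slow_after_hours)
    return mean(list(waits.values())), slow
```

As with the other engineering metrics, the intent is to spot patterns a team can improve, not to act as a quality gate.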

Continuous Deployment (CD)

More to come.